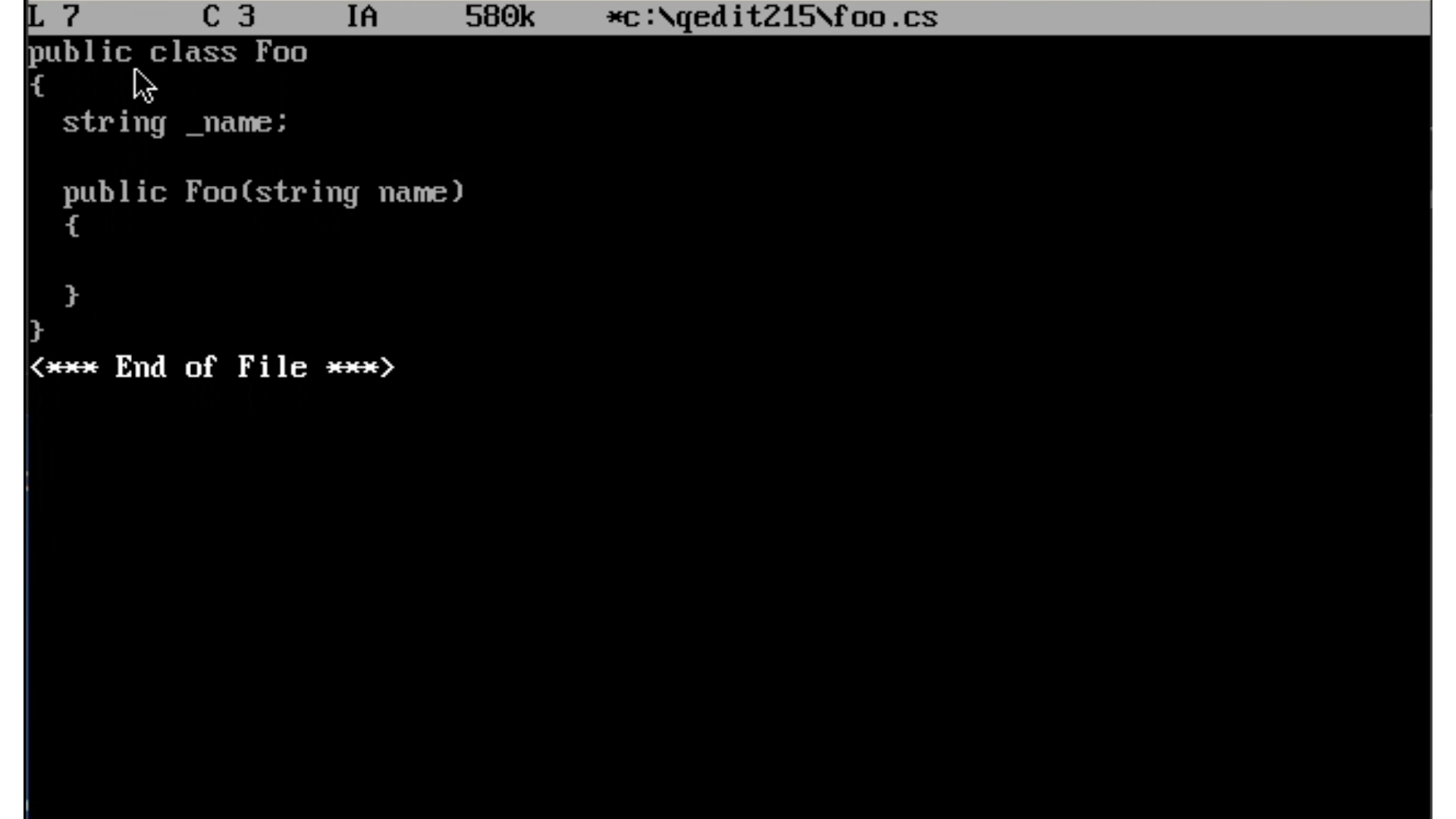

At the start of my career, the most valuable tool I had was a text editor. I used the amazing QEdit (later called SemWare Editor), which I wrote code in from 1985 until I moved to Windows development in the 1990s.

This editor influenced the rest of my career. I expected every editor after QEdit to be instantly responsive and to defer to my typing instead of trying to help me along. As a result, I have always been resistant to having an IDE help me code.

For my C# projects, Visual Studio has always been a little busy with IntelliSense. I've actually got a video on how to quiet Visual Studio.

But it's not just Visual Studio. ReSharper is a go-to tool that many C# developers use to help them code and refactor. It's a great tool, but reducing its noise was harder than it should have been. So, like IntelliSense, I mostly shunned ReSharper in my IDE.

When I started to use VS Code, I really liked that the editor was slim and fast, but over time, plugins and additions to the tool made it more and more uncomfortable.

Then came Copilot. I really hated its default integration. I was never interested in typing a few characters and having it build an entire function. The constant popups in Visual Studio and VS Code, all trying to help me, kept getting in my way. My cry was "Just Let Me Type!" So, it's taken me a while to come to terms with it all.

Let me say that I believe, unequivocally, that AI code models are here to stay.

Integrating AI models into tooling can help developers in some really great ways. But… for me… I want to keep the AI tools out of the editor. Kinda.

Vibe coding, prompt engineering, the end of developers: I think these are all hyperbole, and they pull us away from the ways these tools can really help us. For me, AI chats are way more effective at helping me build apps.

AI chats allow me to ask questions and to build code or make changes to my code in a really effective way. I still think AI is better at starting a project (in lieu of scaffolding) and at refactoring one than at building full-fledged solutions.

Just because you can describe a whole system and have it generated (and then, hopefully, validated by humans) doesn't mean you should. It reminds me of how we built code earlier in my career.

In the early 1990s, building code was expensive. Planning was crucial to successful projects. Because we didn't have reliable garbage collection or security validation (and we were working with unmanaged pointers), we needed to plan out our projects very carefully.

This was the era of Waterfall Development. You spent a significant amount of time writing a specification. You spent more time validating the assumptions in that specification. Only then did you actually start writing code. Because of the limitations in the languages, tooling, and talent, this worked well. Not perfectly, but well.

In the meantime, as the tooling has improved, we've moved beyond that to writing code and refactoring as we go. Writing code and breaking things, while also writing tests and validating the code rather than the spec, is now the norm. Many readers of this blog probably can't imagine how any projects got finished back then. They're not completely wrong.

That brings us to today. By embracing vibe coding and prompt engineering, we're back to writing specs. If you want the AI to build the project, you really have to know what you are building and how you are building it in order to have a decent shot at generating the right kind of code. Is that really what we want? I am not sure.

So, what do you think? Am I just an old dude with antiquated ideas? Maybe. Let’s have that discussion (either by reaching out on Bluesky or commenting on this post). I’m ready to learn. I am not convinced I am correct.